
It’s not difficult to find talks and articles around the world about the wonders of Embarcadero's DataSnap technology. I attended the Delphi Conference in São Paulo, Brazil, in 2011 and 2012, after the releases of Delphi XE2 and XE3, and the words “performance” and “stability” were not spared whenever the subject was DataSnap. It looks like a perfect solution for companies that have invested their lives in Delphi and now need to reinvent themselves and offer web and mobile services. But does DataSnap really work under critical conditions?

I found some references from other people talking about it on StackOverflow:

- How to do a REST webserver with Delphi as a backend for a big web application?

- Using REST with Delphi

The company I work for is one of the five largest in Brazil when it comes to software for supermarkets. The main product is a considerably large ERP that has been developed in Delphi for over ten years. Recently we started a study to evaluate technologies that would allow us to migrate from a client/server to an n-tier application model. The main need was to reuse the same business logic, implemented in a centralized location, across different applications.

The most obvious option is DataSnap, which works very much like what is found in legacy applications: you can drag and drop components and use dbExpress, for example.

We tested DataSnap to find out what level of performance and stability it provides and to verify whether it really meets our requirements. Our main requirement was the server’s ability to manage many simultaneous connections, since the application is large and used by many users.

Objective

Our objective was to test the DataSnap REST API and to answer some questions:

- How does it behave in an environment with many concurrent connections?

- What is the performance under critical conditions?

- Is it stable under critical conditions?

Methodology

The tests are based on a lightweight REST method without any processing or memory allocation, returning only the text “Hello World”. The DataSnap server was created using the Delphi XE3 wizard, and we implemented the HelloWorld method in it. The testing was performed with all lifecycle types (Invocation, Server and Session) and with both VCL and console applications. However, as the results were identical, the data presented here comes only from the console version with the “Server” lifecycle.
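To give an idea of how little work such an endpoint does, here is a minimal sketch of an equivalent “Hello World” HTTP server. It is written in Python with the standard library only, purely as an illustration: it is not the DataSnap server used in the tests, and the `/hello` path and the helper names `start_server` and `fetch_hello` are assumptions made for this example.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET with the plain text 'Hello World'."""

    def do_GET(self):
        body = b"Hello World"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Suppress per-request logging so it does not distort a load test.
        pass


def start_server(port=0):
    """Start the server on a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), HelloHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_hello(server):
    """Send one GET request (hypothetical /hello path) and return (status, body)."""
    host, port = server.server_address
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", "/hello")
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()
```

A server like this does no processing beyond writing a fixed string, so any large difference between frameworks on such a method comes from the framework's own request handling rather than from application code.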

We decided to create a few servers based on other technologies, not necessarily similar or with the same purpose, just to have a basis for comparison. We created servers using the following frameworks:

- mORMot (Delphi)

- ASP.NET WCF

- Jersey/Grizzly (Java)

- Node.js (JavaScript)

As you can see, those are totally different frameworks, some with different purposes, in different languages. I want to make clear that we are not comparing the performance of these frameworks with one another, because we have not mastered these technologies. We use them simply to get a sense of how they behave compared to DataSnap.

The hardware environment used in the tests:

We used JMeter for testing. JMeter is a great tool, developed in Java, that specializes in server performance testing. Before anyone questions the use of this software, I obtained similar results with a tool I developed myself in Delphi. The only problem we had was with the faster servers: if we kept the results screen in focus, the tests were affected, because refreshing the screen delayed the sending of HTTP packets to the server. We took care that this did not happen during our tests.

Tests without concurrency

The first tests were executed without concurrency in order to observe the behavior of the servers in a less critical situation. We ran two tests, one with 100,000 and another with 1 million requests. This allowed us to evaluate whether server behavior changes as the number of requests increases.
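The idea of a test without concurrency can be sketched as a single loop that sends one request after another and divides the count by the elapsed time. The Python sketch below is only an illustration of that measurement, not the JMeter test plan we used; `measure_throughput` and the `send_request` callable are hypothetical names, and the callable stands in for whatever actually performs the HTTP request.

```python
import time


def measure_throughput(send_request, total_requests):
    """Call send_request sequentially and return (completed, requests_per_second).

    send_request is any zero-argument callable that performs one request;
    it is an assumption of this sketch, not part of any real test tool.
    """
    start = time.perf_counter()
    completed = 0
    for _ in range(total_requests):
        send_request()
        completed += 1
    elapsed = time.perf_counter() - start
    # Guard against a zero elapsed time on very fast dummy callables.
    rps = completed / elapsed if elapsed > 0 else float("inf")
    return completed, rps
```

Because only one request is in flight at a time, the measured rate is capped by the round trip of a single client thread, no matter how fast the server is.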

The performance test (requests per second) produced the same result in both runs (100 thousand and 1 million requests):

In this test the other servers are practically 13 times faster than DataSnap; the difference is huge. It is also possible to note a bottleneck (since all the other servers obtained the same result), possibly in the number of requests that the client machine can send using a single thread.

The memory consumption results differed between the two tests (100 thousand and 1 million requests), so let’s look at both:

NOTE: The memory consumption of the Java server needs to be evaluated carefully because it is the measurement of the whole Java virtual machine.

In this graph we see two different behaviors. The mORMot, WCF and Node.js servers used the same amount of memory in both tests. With more requests, both the Java and DataSnap servers raised their memory consumption significantly, enough to get us worried.

Why is the server consuming so much memory just answering HelloWorld requests? Before seeing the results I would have bet that none of the servers would use that much memory to answer such a simple request.

In the case of DataSnap, we noted that if the tests continue without any pause for the server, executing 10 million requests for example, memory consumption keeps rising (to infinity and beyond). The server consumed up to 1 GB of memory just answering HelloWorld. How can this be explained?

Another interesting detail is that after the tests stop, the memory consumption of the DataSnap server returns, gradually and very slowly (taking hours in some cases), to the level it had at startup. This leaves a question: what happens if the server has to work 24/7?

Tests with concurrency

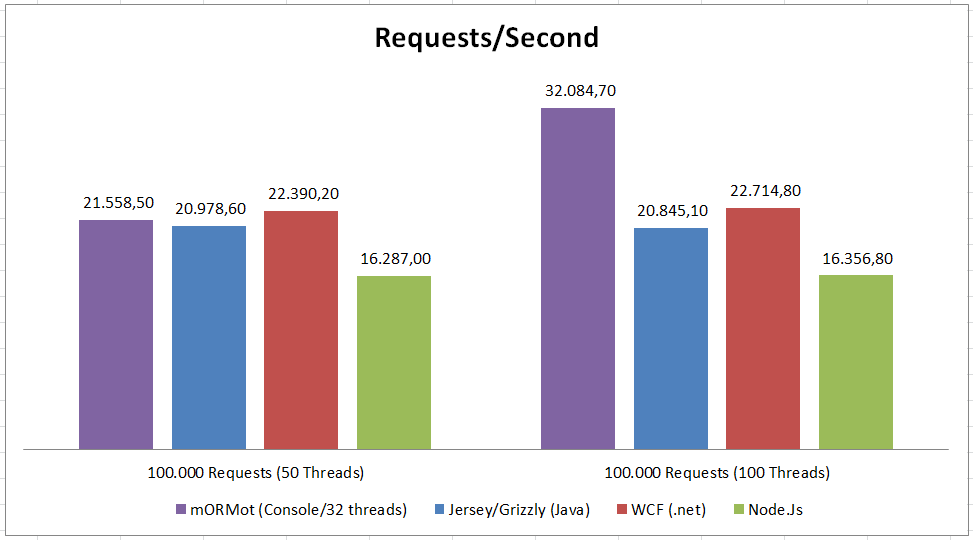

These tests aim to stress the server and verify its maximum efficiency in handling requests and HTTP packets. We ran two tests, one with 50 and another with 100 threads, which means 50 and 100 simultaneous requests, respectively. Each thread sent 100,000 requests to the server, totaling 5 and 10 million requests in the respective tests.
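The shape of the concurrent test can be sketched as follows: a fixed number of client threads, each sending its own share of requests, with the total being threads times requests per thread. This Python sketch only illustrates that structure and is not the JMeter plan we ran; `planned_total`, `run_concurrent` and the `send_request` callable are hypothetical names.

```python
import threading


def planned_total(thread_count, requests_per_thread):
    """Total number of requests a test run is expected to send."""
    return thread_count * requests_per_thread


def run_concurrent(send_request, thread_count, requests_per_thread):
    """Run send_request from several threads and return how many calls completed.

    send_request is any zero-argument callable that performs one request
    (an assumption of this sketch).
    """
    # One counter slot per thread, so no lock is needed while counting.
    completed = [0] * thread_count

    def worker(index):
        for _ in range(requests_per_thread):
            send_request()
            completed[index] += 1

    threads = [
        threading.Thread(target=worker, args=(i,)) for i in range(thread_count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return sum(completed)
```

With the numbers from our tests, `planned_total(50, 100_000)` gives 5 million requests and `planned_total(100, 100_000)` gives 10 million.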

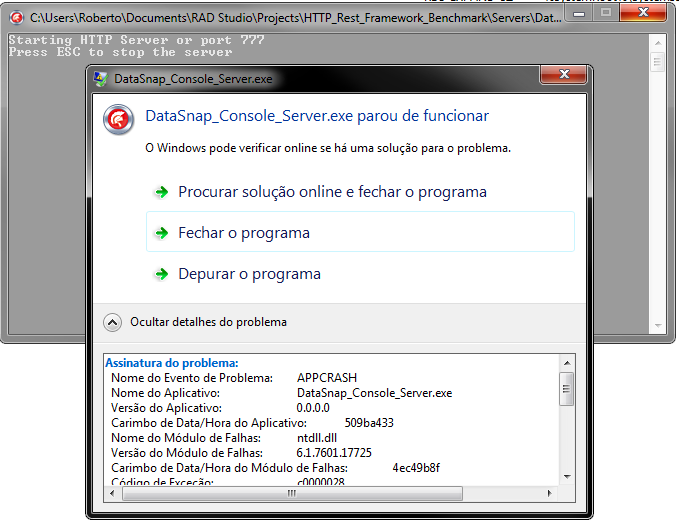

To my surprise and disappointment, it was not possible to perform this test with the DataSnap server. I tried several times and the server simply crashed.

So let's evaluate the results of the other servers only.

Node.js got the worst result, but do not be fooled, because it got this result using only a single core. This is a limitation of the architecture of that framework, but I could, for example, have started four instances of it and load-balanced across them, which would have given a higher performance than the one obtained in this test. Unfortunately I did not have time to implement this solution.

The ASP.NET WCF and Java servers had very good results, with a certain advantage for WCF. Both had the same results with 50 and 100 threads, which indicates that this is their limit in this environment and hardware. During testing we noticed that these servers used almost all hardware resources, while the client application worked at 60%, which indicates that the limit was on the server.

The mORMot server surprised me, first by its excellent results in the test with 100 threads, and then by the way it got them. In contrast to what occurred with the Java and WCF servers, we noted a use of only 50% of the resources of the server machine, while the client machine was at 100% processor usage. This indicates that the bottleneck was in the client application, and that with a better client machine or more machines (as in a real application) the server could be even faster.

Considering performance and especially efficiency, mORMot gave the best results with these implementations, but in general all of them had very good results, except DataSnap, which did not complete the test.

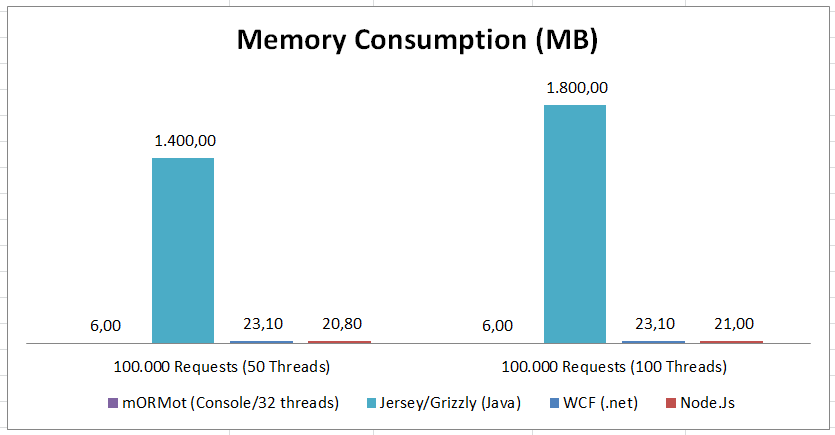

The memory consumption results remained similar to the previous tests for the mORMot, WCF and Node.js servers. The Java server followed the trend already observed in the other tests, with memory consumption proportional to the number of requests, which is concerning, to say the least.

Conclusion

We conclude that DataSnap has a serious stability problem when placed in an environment with concurrent connections. The server stops working even when we put only two concurrent connections under a stress test, which is very worrying.

Looking at performance, we observed that DataSnap is much slower than the other solutions tested.

Overall, I can say that all the other frameworks have the performance and stability that a large application needs. In particular, mORMot surprised me with truly outstanding performance. The guys at Synopse are doing a fantastic job.

Why is DataSnap so slow? And why does it crash?

It is difficult to pinpoint the cause of these problems without knowing the whole DataSnap structure and without having participated in its development, but I have a theory.

Apparently, the HTTP communication layer of the framework makes use of the Indy components, which create a thread for each HTTP request. This behavior causes the server to create and destroy dozens of threads per second, which causes a huge overhead. This overhead is certainly the cause of the poor performance of the framework. It is hard to say what causes the server to crash, but I believe the two are connected.
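The difference between the two threading models in this theory can be sketched in a few lines. The Python example below is only an illustration of the general idea (one new thread per request versus a fixed pool of reused threads); it says nothing about how Indy or DataSnap are actually implemented, and the function names are made up for this sketch. It counts how many distinct threads end up handling the same number of requests in each model.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def handle_request(seen, lock):
    """Stand-in for request handling: record which thread did the work."""
    with lock:
        seen.add(threading.current_thread().name)


def thread_per_request(request_count):
    """Create and destroy one thread for every request."""
    seen, lock = set(), threading.Lock()
    for _ in range(request_count):
        thread = threading.Thread(target=handle_request, args=(seen, lock))
        thread.start()
        thread.join()
    # Number of distinct threads that were created to serve the requests.
    return len(seen)


def pooled(request_count, workers):
    """Serve all requests with a fixed pool of reusable worker threads."""
    seen, lock = set(), threading.Lock()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for _ in range(request_count):
            pool.submit(handle_request, seen, lock)
    # Leaving the with-block waits for all submitted work to finish.
    return len(seen)
```

In this sketch, `thread_per_request(50)` creates 50 threads, while `pooled(50, 4)` never uses more than 4, so the cost of creating and destroying threads is paid a handful of times instead of once per request.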

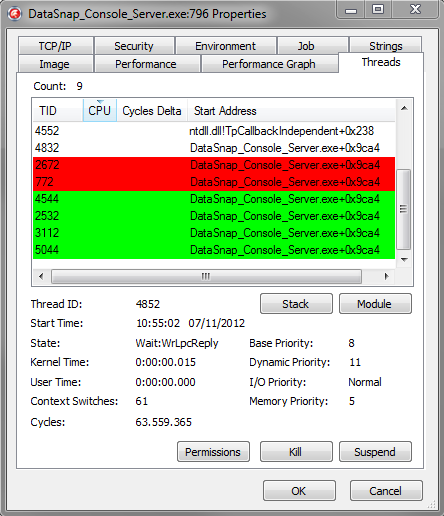

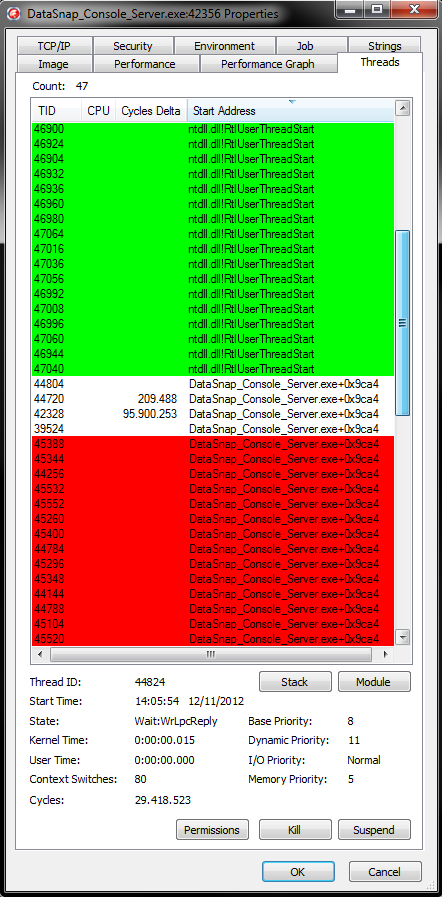

With the help of Process Explorer we can see this overhead. The graph shows the threads that have been created recently (green) and those that are being destroyed (red).

Status of the first test:

Status of the second test with 100 threads, captured before the server crash:

In this image we can see that the server was running 47 threads and the list of threads being created and destroyed is huge.

Another important detail is that practically all of the CPU consumption of the DataSnap server is overhead from creating and destroying threads.

Final Thoughts

I’ll end with a quote from Marco Cantù (currently Delphi Product Manager) in the book Delphi 2010 Handbook.

> I think that if you want to build a very large REST application architecture you should roll out your own technology or use one of these prototypical architectures. For a small to medium size effort, on the other hand, you can probably benefit from the native DataSnap support.

All the source code of the servers, as well as the binaries and the JMeter test plans, are available for download at https://github.com/RobertoSchneiders/DataSnap_REST_Test.

I personally spoke with some Embarcadero staff in São Paulo on 10/23/2012 (Delphi Conference) about this problem. We informed Embarcadero Brazil about the partial results of our tests on 10/30/2012 and sent the final results presented here on 11/14/2012 by e-mail. So far, nobody from Embarcadero has offered support or any solution to the problem we are experiencing.

To all of the people involved in this testing period, I leave my thanks. I would like to thank Eurides Baptistella (Systems Analyst, Sysmo Sistemas), who created the Java server and helped in the preparation and execution of the tests; Adriano Baptistella (Systems Analyst, Sysmo Sistemas), for creating the ASP.NET server; Dean Michael Berris (Software Engineer, Google) and Everton Antunes de Oliveira (Development Research Analyst, Pixeon Medical Systems), for their help with creating a C++ server (cpp-netlib), which unfortunately could not be finished in time for use in testing; and Cesar Arnhold (Third Engineer Officer, Maersk Supply Service) and Mateus Artur Schneiders (Systems Analyst, QuickSoft), for reviewing this text.

If you liked this, feel free to leave a comment.

This post continues in DataSnap analysis based on Speed & Stability tests – Part 2

Hi, I came here by a stackoverflow link.

I have read this article with great interest, because my company decided to migrate to the datasnap technology.

Thanks for this very detailed analysis.

We have reproduced the tests with Delphi 10.1 Berlin (using JMeter), and I can confirm that the results don’t change compared to what you reported.

In addition, the lack of datasnap documentation is another serious problem.

Thank you Danilo. I really appreciate that information. People ask me all the time if DataSnap is fixed in the latest Delphi version, and it is hard to know for sure, although EMBT has not been actively working on DataSnap for years.

Unfortunately, it seems that the DataSnap technology is no longer part of the main line from Embarcadero (now IDERA). This is very funny, as the official Brazilian events continue to encourage the use of this solution for any corporate environment. The absence of an effective solution for such an attractive technology is very bad for everyone (community and manufacturer). Congrats on your blog!

> Unfortunately, it seems that the DataSnap technology is no longer part of the main line from Embarcadero

Where did you hear this?

As far as I know DataSnap is absolutely a part of the main line and will continue to be. Which is good, because I use it a lot.

Hi Bruce, I’m a DataSnap enthusiast too. I have not heard it anywhere, it’s just a personal impression, since the “problem” shown in this post (XE2 and XE3) still persists in Delphi Berlin.

If you are a DataSnap enthusiast, then you probably followed a bunch of the changes that happened afterwards.

It’s still no Node.js, but I would love to see the tests repeated with the latest version.

IT IS REALLY A GOOD WORK.

As a software developer and a fan of Borland’s products since Turbo Pascal and Turbo C in the ’90s, I attended one of Embarcadero’s conferences in Toronto, Canada, about 5 years ago, which talked about the benefits and capabilities of DataSnap. “David I” managed to convince me to buy RAD Studio XE3 EE, and I started migrating one of my big and productive client/server applications to an n-tier architecture based on DataSnap. After wasting significant time and effort and facing complications in transferring streams and other techniques, I came to the conclusion that it was not the right choice. Since then I have switched to JEE, JSF, and JFX, which need some effort in the beginning to understand their frameworks and get ready, but are easier to implement and deploy. FINALLY, I SAID GOODBYE TO DELPHI AND C++BUILDER.

All of your images are gone so we can’t see the benchmarks.

I hope you have them backed up somewhere so they can be restored

For the web page:

https://robertocschneiders.wordpress.com/2012/11/22/datasnap-analysis-based-on-speed-stability-tests/

Thanks for letting me know, I hadn’t realized that. I’m not sure I still have these images, but I’ll take a look.